Multi-agent coding sounds complex, but the core concept is straightforward: more than one AI working at the same time, without stepping on each other. This is not about replacing engineers or automating away the craft of software development. It is about removing artificial limits on how work flows through a team.

The numbers tell the story of why this matters now. According to recent industry data, monthly code pushes have climbed past 82 million, merged pull requests reached 43 million, and 41% of new code now originates from AI-assisted generation. AI adoption has reached 84% of all developers, marking the fastest acceleration of code creation the industry has ever recorded. Yet delivery timelines have not improved proportionally, and in many cases they have gotten worse. The bottleneck has simply moved.

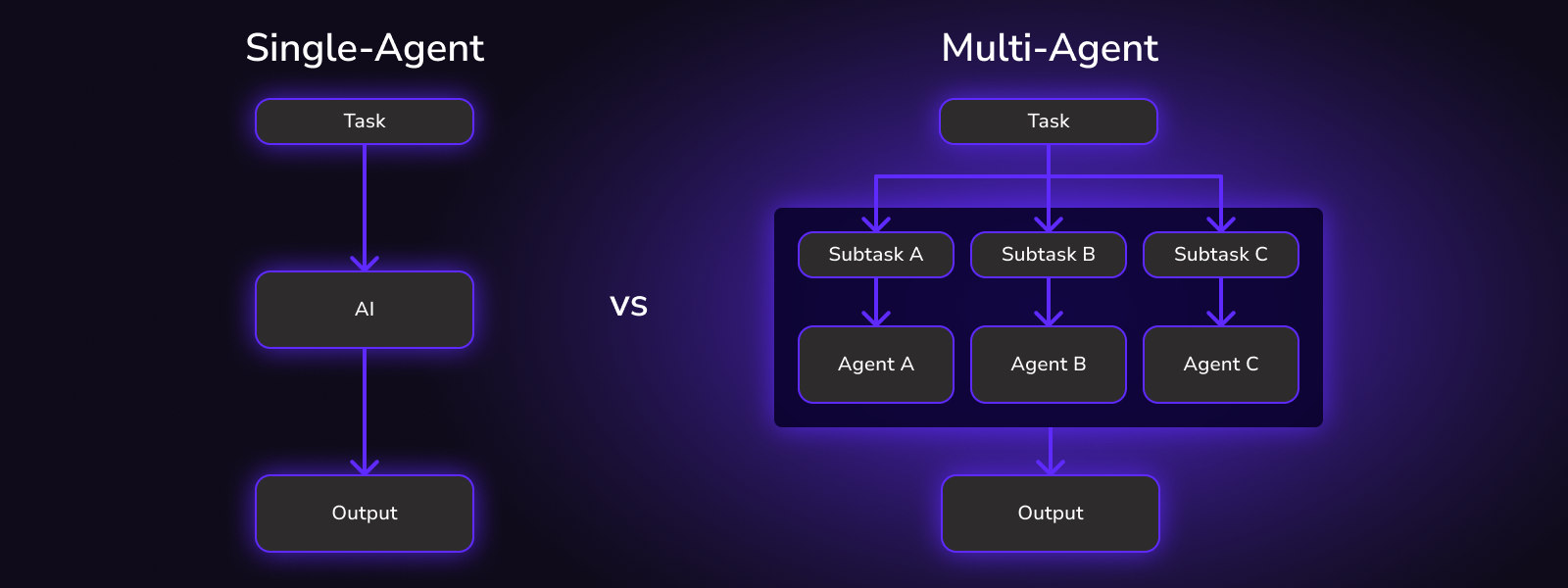

In a single-agent workflow, one AI assistant handles one task in one context, producing one stream of changes. The developer waits for output, reviews it, iterates, and eventually moves on to the next task. This model made sense when AI assistance was novel, but it creates a fundamental constraint: everything happens sequentially, even when the work itself could happen in parallel.

Multi-agent workflows change this dynamic. Multiple AI assistants work simultaneously, each operating in an isolated context with its own branch and working directory. Each agent focuses on a clearly defined task, such as a bug fix, a new feature, or a refactor, while the developer orchestrates and reviews. The work that would have queued up behind a single agent now runs concurrently.

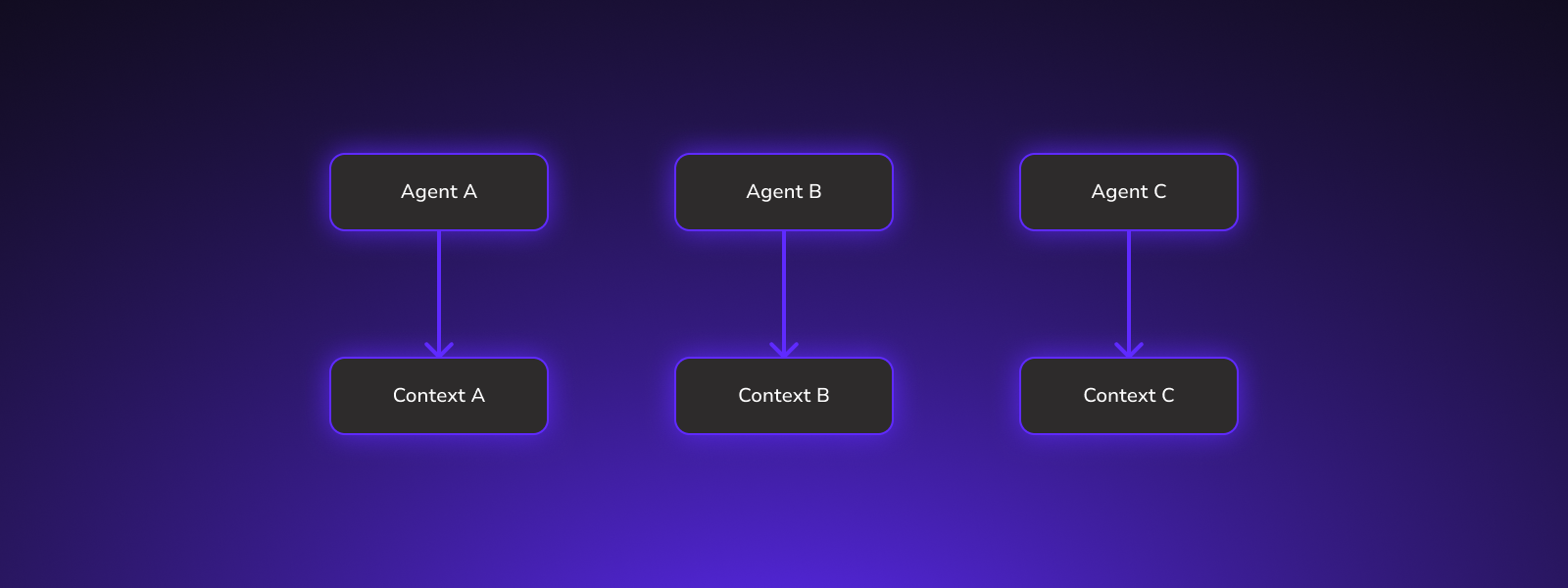

Multi-agent systems only work if the agents are properly isolated from each other. Without isolation, multiple agents attempting to work on the same codebase will create conflicts, overwrite each other's changes, and produce chaos rather than productivity.

As the Nx engineering blog explains: "When you force Claude Code to context-switch between branches, you're throwing away its most valuable asset: the deep understanding it has built up about your specific codebase, your patterns, your goals."

Git worktrees have emerged as the standard solution for this problem. They allow developers to check out multiple branches of the same repository simultaneously in different directories, all backed by the same underlying Git history. Because Git will not check out the same branch in two worktrees by default, each AI agent gets its own branch and its own isolated working environment with stable file paths, a consistent build state, and no risk of interfering with other agents' work.
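As a rough illustration, the sketch below builds one `git worktree add` command per agent task. The `agent/<task>` branch prefix, the sibling-directory layout, and the `main` base branch are assumptions made for this example, not conventions from any particular tool. It defaults to a dry run that only prints the commands.

```python
from __future__ import annotations

import subprocess
from pathlib import Path


def worktree_add_command(repo: Path, task: str, base: str = "main") -> list[str]:
    """Build the `git worktree add` command for one agent task.

    Assumed layout (hypothetical): the worktree lives next to the main
    checkout as `<repo>-<task>` on a new branch named `agent/<task>`.
    """
    if not repo.is_absolute():
        # `git -C` changes directory first, so relative paths would be ambiguous.
        raise ValueError("repo must be an absolute path")
    branch = f"agent/{task}"
    path = repo.parent / f"{repo.name}-{task}"
    # git worktree add -b <new-branch> <path> <start-point>
    return ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path), base]


def create_worktrees(
    repo: Path, tasks: list[str], base: str = "main", dry_run: bool = True
) -> list[list[str]]:
    """Create (or, in a dry run, just print) one worktree per task."""
    commands = [worktree_add_command(repo, task, base) for task in tasks]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)  # raises if git reports an error
    return commands


if __name__ == "__main__":
    create_worktrees(Path("/srv/app"), ["fix-login", "refactor-api", "docs"])
```

Each agent would then be started with its own directory as the working directory, so no two agents ever share a checkout.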

Isolation means separate branches, clean diffs, no shared working-directory state, and no accidental overwrites. One developer described running five active worktrees in a large monorepo: one for updating components, another for refactoring an API, a third for documentation, and two more for features and performance work. Each worktree had its own AI agent instance running independently. The result is faster feedback, less friction, and cleaner merges when the work comes back together.

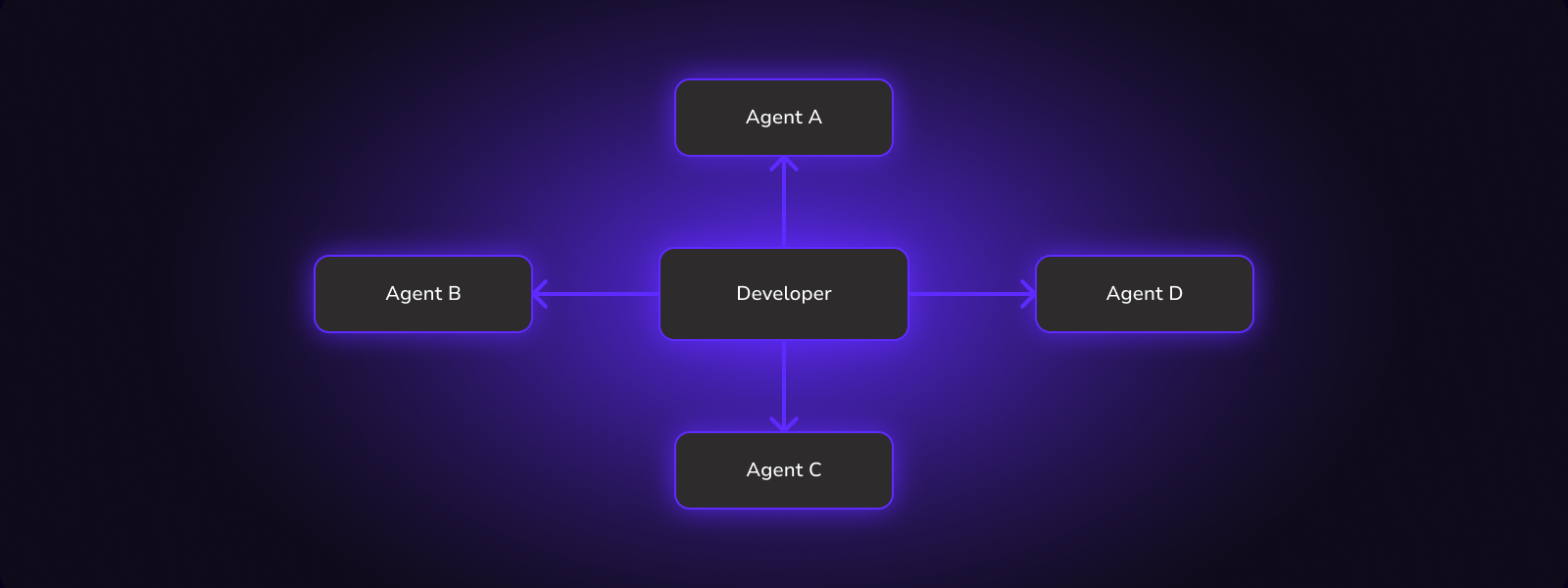

In a multi-agent workflow, the developer's role shifts from implementer to orchestrator. Rather than writing code directly or babysitting a single AI through a task, developers define the work, launch agents to execute it, review the outcomes, and decide what ships.
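The define, launch, review loop can be sketched in a few lines. This is a minimal sketch under stated assumptions: `Task`, `Outcome`, `orchestrate`, and `fake_agent` are hypothetical names invented for illustration, and `run_agent` stands in for whatever actually drives an AI assistant inside a worktree.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Task:
    name: str       # short label, e.g. "fix-login"
    worktree: str   # directory the agent is confined to
    prompt: str     # instructions handed to the agent


@dataclass(frozen=True)
class Outcome:
    task: Task
    summary: str    # what the agent reported, or the failure message
    ok: bool        # False if the agent raised an exception


def orchestrate(
    tasks: list[Task], run_agent: Callable[[Task], str], max_parallel: int = 4
) -> list[Outcome]:
    """Run one agent per task concurrently and collect outcomes for review."""

    def run_one(task: Task) -> Outcome:
        try:
            return Outcome(task, run_agent(task), ok=True)
        except Exception as exc:  # one failing agent must not sink the batch
            return Outcome(task, f"failed: {exc}", ok=False)

    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # pool.map preserves input order, so outcomes line up with tasks.
        return list(pool.map(run_one, tasks))


def fake_agent(task: Task) -> str:
    """Placeholder agent (assumption): a real one would call an AI tool here."""
    return f"{task.name}: changes ready in {task.worktree}"


if __name__ == "__main__":
    tasks = [
        Task("fix-login", "/srv/app-fix-login", "Fix the login redirect bug"),
        Task("docs", "/srv/app-docs", "Update the API documentation"),
    ]
    for outcome in orchestrate(tasks, fake_agent):
        print("OK  " if outcome.ok else "FAIL", outcome.summary)
```

The developer's decisions sit at both ends of this loop: choosing what goes into `tasks`, and deciding which outcomes ship.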

This shift reflects a broader change happening across the industry. Recent research suggests that AI-assisted programming moves the mental burden from code generation to the more demanding task of code evaluation. The primary bottleneck in development is no longer writing code; it is validating, comprehending, and integrating the code that AI systems produce.

Multi-agent workflows align with this reality rather than fighting it. By breaking work into smaller, focused tasks distributed across multiple agents, the resulting diffs are smaller and more reviewable. Developers can compare different approaches to the same problem and reject solutions that do not meet their standards without losing significant time.
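One crude way to compare competing attempts is by diff size. The sketch below parses the tab-separated output of `git diff --numstat` and ranks candidate branches from smallest to largest change. The branch names are hypothetical, and line count is only a rough proxy for review effort, not a measure of quality.

```python
from __future__ import annotations


def diff_size(numstat: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output.

    Each line looks like "<added>\t<deleted>\t<path>"; binary files are
    reported as "-\t-\t<path>" and are skipped here.
    """
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t", 2)
        if len(parts) != 3:
            continue  # ignore blank or malformed lines
        added, deleted, _path = parts
        if added == "-" or deleted == "-":
            continue  # binary file: no line counts available
        total += int(added) + int(deleted)
    return total


def rank_candidates(candidates: dict[str, str]) -> list[tuple[str, int]]:
    """Return (branch, changed_lines) pairs, smallest diff first."""
    sized = [(branch, diff_size(text)) for branch, text in candidates.items()]
    return sorted(sized, key=lambda item: item[1])


if __name__ == "__main__":
    # Sample text standing in for `git diff --numstat main...<branch>` output.
    candidates = {
        "agent/cache-v1": "40\t12\tsrc/cache.py\n5\t0\ttests/test_cache.py\n",
        "agent/cache-v2": "9\t3\tsrc/cache.py\n-\t-\tdocs/diagram.png\n",
    }
    for branch, size in rank_candidates(candidates):
        print(f"{branch}: {size} changed lines")
```

A ranking like this only orders the review queue. Whether a candidate meets the team's standards remains a human call.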

The urgency comes from a capacity problem that is already affecting teams across the industry. Engineering organizations report that while AI coding tools deliver 2-5x faster code generation, overall delivery timelines often remain unchanged or even increase. Code review overhead has grown substantially, with reviews for AI-heavy pull requests taking 26% longer as reviewers must check for AI-specific issues like inappropriate pattern usage and architectural misalignment.

The uncomfortable truth is that teams are now writing code faster than they can review it. Senior engineers find themselves overwhelmed by the volume of AI-generated code that, while syntactically correct, raises questions about architecture, maintainability, and alignment with system design. Review capacity has become the limiting factor for delivery performance.

Multi-agent workflows do not eliminate this review burden, but they make it manageable. Smaller, focused changes are easier to evaluate than large, sprawling pull requests. Running multiple approaches in parallel allows comparison rather than serial iteration. And the explicit orchestration role gives developers a framework for deciding what deserves attention and what can be rejected quickly.

That is how teams scale AI assistance without losing control: not by generating code faster, but by structuring the work so that human judgment can keep pace with machine output.