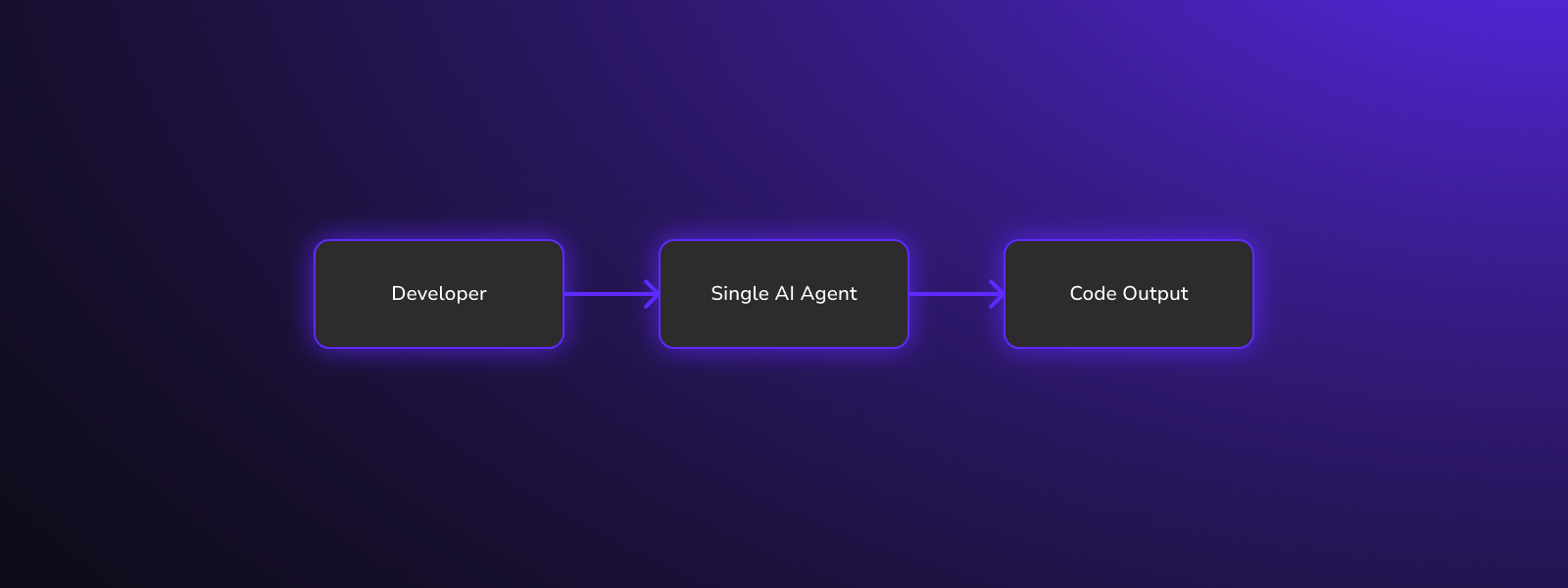

AI pair programming was a genuine breakthrough in developer productivity, but the paradigm is already showing its limits. Most AI coding tools still operate under a single-agent assumption: one AI handles one task and produces one stream of output at a time. That model seemed revolutionary when AI-assisted coding was new, but it has become a major bottleneck in modern development workflows.

The evidence is striking. In a randomized controlled trial published in July 2025, METR found that AI tools made experienced open-source developers 19% slower, even though those same developers had expected a 24% speedup before the study began. The likely culprits will be familiar to anyone who has spent time with these tools: waiting for responses, context-switching between tasks, and over-reliance on a single stream of AI output that may or may not be heading in the right direction.

Software development has never been a purely serial activity. At any given moment, engineers are juggling bugs, features, refactors, and experiments that all compete for attention. Single-agent workflows force teams to serialize work that would naturally run in parallel, creating artificial constraints that compound over time.

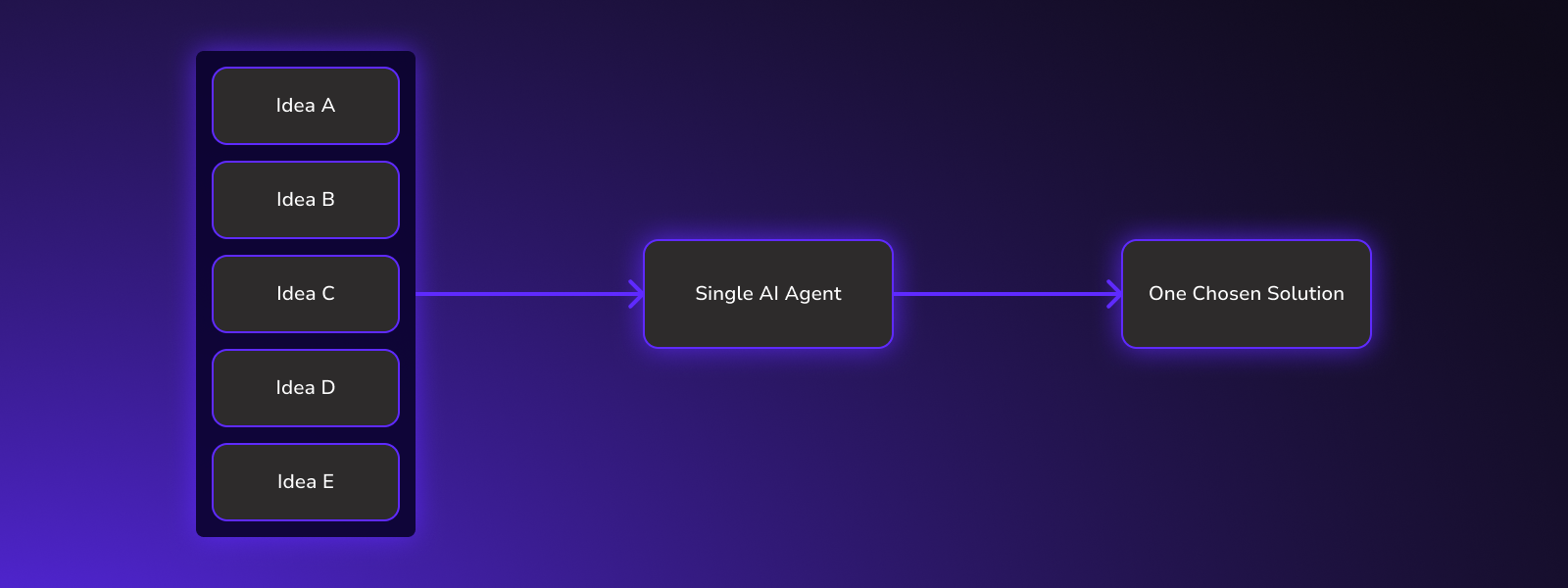

When teams rely on one AI assistant at a time, they inadvertently introduce several forms of friction into their development process. There is idle time while waiting for the AI to generate responses, and these delays (often 5 to 10 seconds per interaction) accumulate across hundreds of daily exchanges. Long feedback loops interrupt concentration and kill momentum. Engineers tend to over-invest in the first solution the AI produces rather than exploring alternatives that might be better. And when that first solution turns out to be wrong, the sunk cost is higher because no parallel exploration was happening in the background.
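To make the accumulation concrete, here is a back-of-the-envelope calculation. It assumes 200 exchanges per day, an illustrative figure rather than a measured one, at the 5 to 10 seconds per interaction cited above, and it counts only raw waiting time, not the cost of regaining focus afterward:

$$
\begin{aligned}
T_{\text{wait}} &= N \times t \\
200 \times 5\,\text{s} &= 1000\,\text{s} \approx 17\,\text{min per day} \\
200 \times 10\,\text{s} &= 2000\,\text{s} \approx 33\,\text{min per day}
\end{aligned}
$$

Over a five-day week, that works out to roughly 1.4 to 2.8 hours per engineer spent simply waiting.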

Engineers naturally think in parallel terms. They make mental notes to fix a bug later while continuing on a feature. They want to try two different approaches and compare the results. They would prefer to explore a new idea while waiting for tests to run on existing code. Single-agent AI tools flatten this parallel thinking into a sequential queue, forcing developers to complete one task before starting another.

This mismatch between how developers think and how their tools operate explains why AI assistance often feels simultaneously powerful and frustrating. The capability is clearly there, but the workflow architecture forces engineers back into serial execution patterns that feel artificially constrained.

The stakes of this architectural choice are becoming clearer as the market matures. Teams that stay on the single-agent path risk falling behind, and the gap may widen faster than many expect.

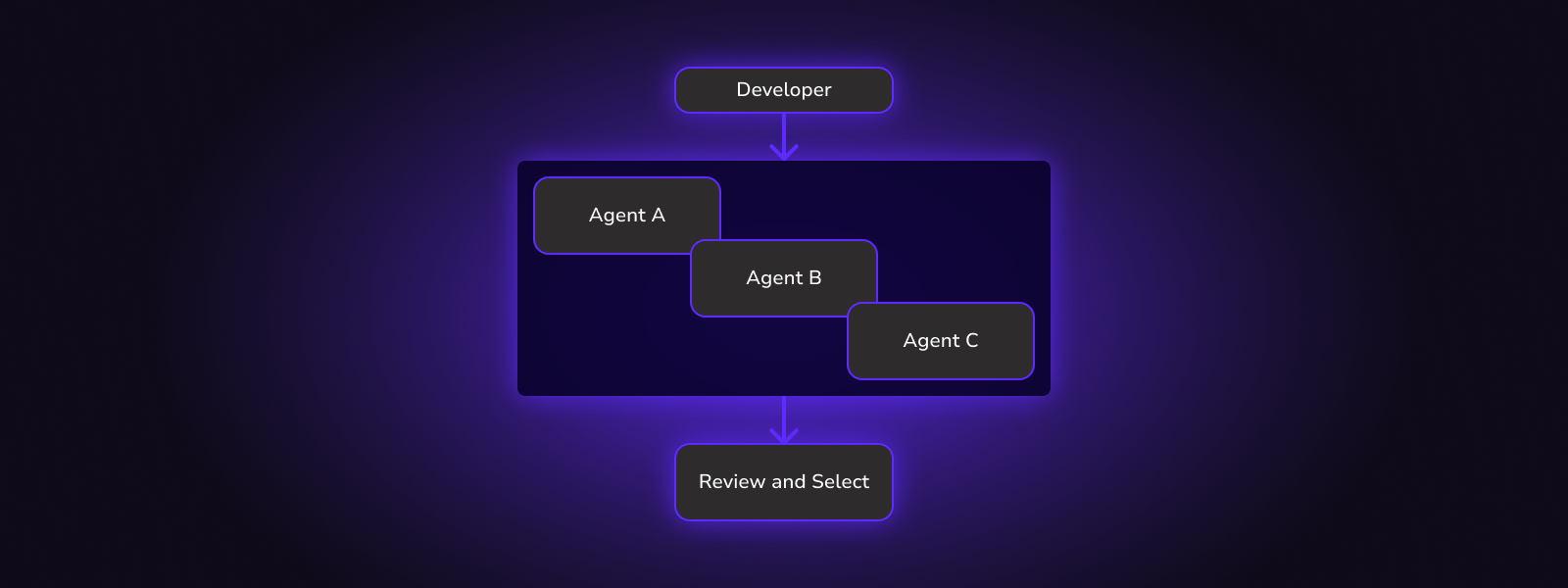

Consider the competitive dynamics: while a team using a single-agent workflow waits for one AI to finish generating code, a competitor may be running five agents in parallel, each exploring a different approach on its own isolated branch. Some teams working this way report shipping quarterly roadmaps in weeks rather than months, and they may reach product-market fit while serial teams are still debugging their first AI-generated pull request.

The productivity gap between serial and parallel AI workflows is not incremental; it compounds over time as parallel teams accumulate learnings and ship features at a fundamentally different rate.

As InfoWorld observed: "The biggest productivity gains don't come from faster typing or better autocomplete. They come from parallelism."

Parallel AI coding inverts the traditional model in several important ways. Multiple agents work simultaneously rather than sequentially. Each agent operates in an isolated context that does not interfere with the others. Independent branches allow for safe experimentation without risking the stability of the main codebase. And human-led orchestration keeps everything aligned with the actual goals of the project.

Rather than asking one AI to produce a perfect solution on the first attempt, teams using parallel workflows ask multiple AIs to explore the solution space and then select the best result. This approach treats AI-generated code as a candidate for evaluation rather than a finished product, which matches how experienced engineers have always approached uncertain technical problems.
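The fan-out-and-select pattern described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any particular tool's implementation. The `Agent` interface, `fan_out`, `select_best`, the branch naming, and the `fake_agent` stub are all hypothetical, and test pass rate is an assumed selection criterion. In a real setup, each agent would typically call a model API and work in its own git branch (or worktree). Here, a stub returns fixed scores so the control flow can run on its own.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Candidate:
    """One agent's proposed solution, produced in its own isolated context."""
    agent_id: int
    branch: str        # hypothetical branch name the agent worked on
    approach: str
    tests_passed: int
    tests_total: int


# Hypothetical agent interface: (agent_id, task, approach) -> Candidate.
# A real agent would call an AI model and commit to its own branch.
Agent = Callable[[int, str, str], Candidate]


def fan_out(task: str, approaches: list[str], agent: Agent,
            max_workers: int = 5) -> list[Candidate]:
    """Run one agent per approach concurrently.

    Each call receives only its own arguments and shares no mutable state,
    which is this sketch's stand-in for per-agent isolation.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(agent, i, task, approach)
                   for i, approach in enumerate(approaches)]
        # Collect results in submission order, not completion order.
        return [f.result() for f in futures]


def pass_rate(c: Candidate) -> float:
    # Guard against an empty test suite to avoid division by zero.
    return c.tests_passed / c.tests_total if c.tests_total else 0.0


def select_best(candidates: list[Candidate]) -> Candidate:
    """Pick the candidate with the highest test pass rate.

    This only shortlists; a human still reviews the winner before merging.
    """
    return max(candidates, key=pass_rate)


def fake_agent(agent_id: int, task: str, approach: str) -> Candidate:
    # Hypothetical stub: fixed scores stand in for running each
    # candidate's test suite. No AI model is called here.
    passed = {"refactor-in-place": 7, "new-module": 9, "patch-call-sites": 8}
    return Candidate(agent_id, f"agent-{agent_id}/{approach}", approach,
                     passed[approach], 10)


if __name__ == "__main__":
    approaches = ["refactor-in-place", "new-module", "patch-call-sites"]
    candidates = fan_out("fix flaky checkout test", approaches, fake_agent)
    best = select_best(candidates)
    print(best.branch)  # agent-1/new-module
```

Threads are a reasonable fit here on the assumption that real agents spend most of their time waiting on network calls rather than computing locally. Keeping `select_best` separate from `fan_out` mirrors the point about human-led orchestration: the code narrows the options, and an engineer decides what to merge.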

Model improvements will undoubtedly continue, and future AI systems will be more capable than current ones. However, the more significant gains are likely to come from how AI is applied within development workflows rather than from raw model performance on benchmarks.

Single-agent AI coding was the first chapter in this story. Parallel, orchestrated AI represents the next phase of evolution. The teams that recognize this shift and adapt their workflows accordingly will be well-positioned to define the next era of software development. Those that do not may find themselves struggling to understand why their AI tools seem to be making them slower rather than faster.